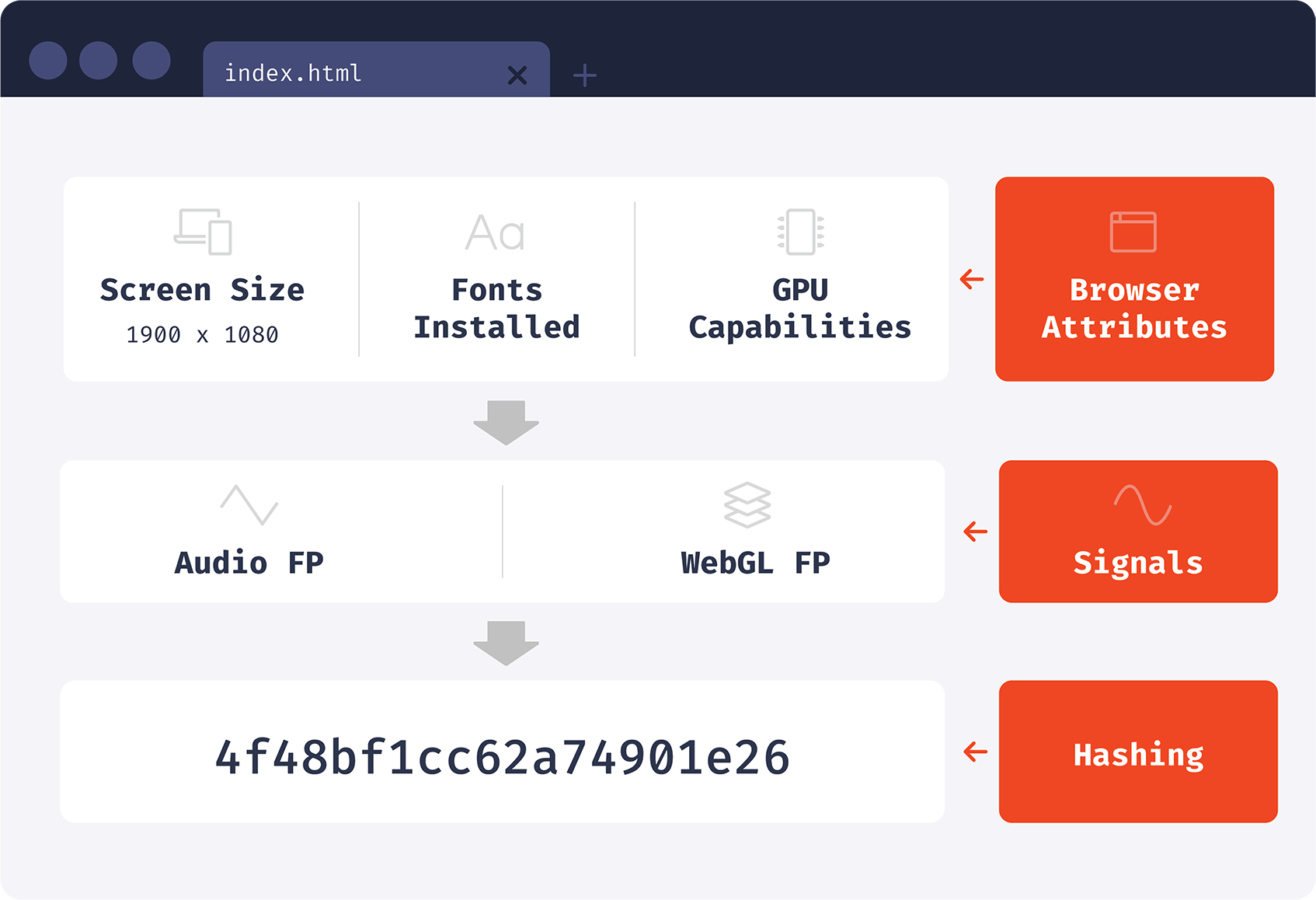

Did you know that you can identify web browsers without using cookies or asking for permissions?

This is known as “browser fingerprinting” and it works by reading browser attributes and combining them together into a single identifier. This identifier is stateless and works well in normal and incognito modes.
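As a concrete illustration, here is a minimal sketch of combining several attributes into one identifier. The attribute names and values are made up. The hash (32-bit FNV-1a over UTF-16 code units) is an illustrative choice, not necessarily what any production library uses. Sorting the keys keeps the result stable no matter in which order the attributes were collected.

```javascript
// 32-bit FNV-1a hash over the string's UTF-16 code units (illustrative choice of hash)
function fnv1a32(text) {
  let hash = 0x811c9dc5 // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i)
    hash = Math.imul(hash, 0x01000193) >>> 0 // multiply by the FNV prime, keep 32 bits
  }
  return hash >>> 0
}

// Hypothetical helper: serialize attributes in a stable order, then hash them
function combineAttributes(attributes) {
  const keys = Object.keys(attributes).sort()
  const serialized = keys
    .map((key) => `${key}:${JSON.stringify(attributes[key])}`)
    .join("|")
  return fnv1a32(serialized).toString(16).padStart(8, "0")
}

// Example attribute values are made up for illustration
const id = combineAttributes({
  timezone: "Europe/Berlin",
  languages: ["en-US", "de"],
  audio: 124.0434806260746,
})
console.log(id) // an 8-character hex string
```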

When generating a browser identifier, we can read browser attributes directly or use attribute processing techniques first. One of the creative techniques that we’ll discuss today is audio fingerprinting.

Audio fingerprinting is a valuable technique because it is relatively unique and stable. Its uniqueness comes from the internal complexity and sophistication of the Web Audio API. The stability is achieved because the audio source that we’ll use is a sequence of numbers, generated mathematically. Those numbers will later be combined into a single audio fingerprint value.

Before we dive into the technical implementation, we need to understand a few ideas from the Web Audio API and its building blocks.

A brief overview of the Web Audio API

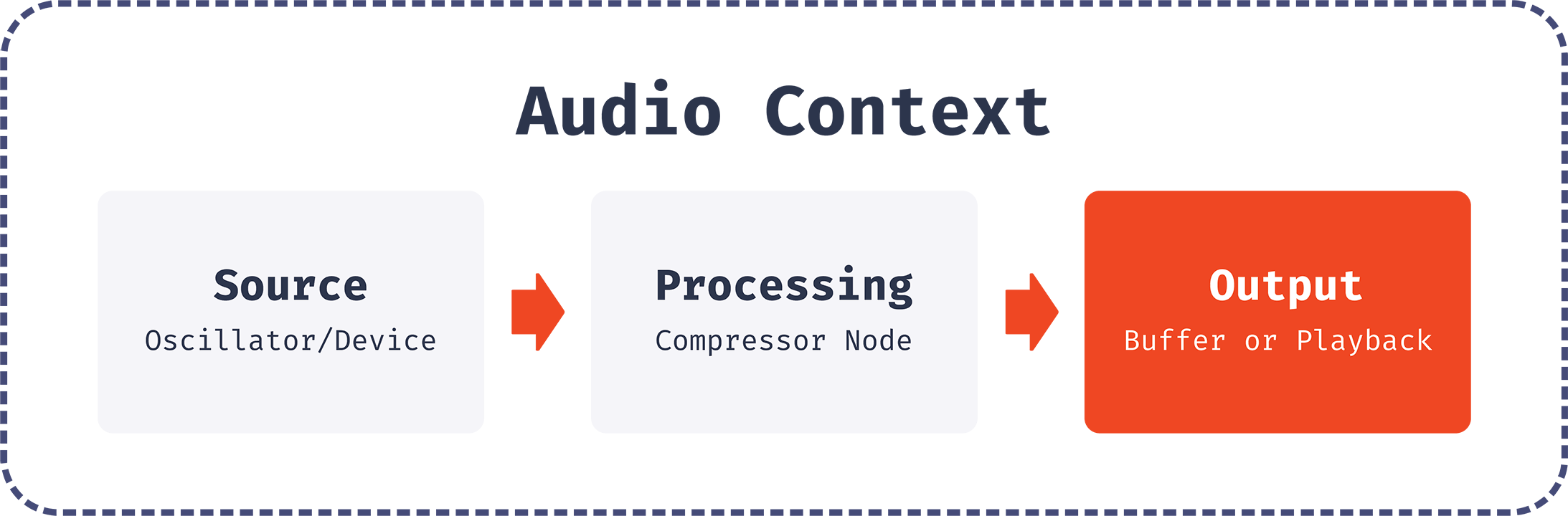

The Web Audio API is a powerful system for handling audio operations. It is designed to work inside an AudioContext by linking together audio nodes and building an audio graph. A single AudioContext can handle multiple types of audio sources that plug into other nodes and form chains of audio processing.

A source can be an audio element, a stream, or an in-memory source generated mathematically with an Oscillator. We’ll be using the Oscillator for our purposes and then connecting it to other nodes for additional processing.

Let’s review the building blocks of the API that we’ll be using.

AudioContext

AudioContext represents an entire chain built from audio nodes linked together. It controls the creation of the nodes and execution of the audio processing. You always start by creating an instance of AudioContext before you do anything else. It’s a good practice to create a single AudioContext instance and reuse it for all future processing.

AudioContext has a destination property that represents the destination of all audio from that context.

There also exists a special type of AudioContext: OfflineAudioContext. The main difference is that it does not render the audio to the device hardware. Instead, it generates the audio as fast as possible and saves it into an AudioBuffer. Thus, the destination of the OfflineAudioContext will be an in-memory data structure, while with a regular AudioContext, the destination will be an audio-rendering device.

When creating an instance of OfflineAudioContext, we pass 3 arguments: the number of channels, the total number of samples and a sample rate in samples per second.

```javascript
const AudioContext =
  window.OfflineAudioContext ||
  window.webkitOfflineAudioContext

const context = new AudioContext(1, 5000, 44100)
```
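With the alias above, `new AudioContext(...)` throws a TypeError when neither constructor exists. If you would rather skip audio fingerprinting in that case, a slightly more defensive sketch looks like this. `getOfflineAudioContextClass` and `snippetDurationMs` are hypothetical helper names. The second helper shows how the sample count and sample rate determine the snippet length.

```javascript
// Returns the OfflineAudioContext constructor, or null where the Web Audio API
// is unavailable (for example outside a browser or in a web worker)
function getOfflineAudioContextClass() {
  const g = typeof window !== "undefined" ? window : globalThis
  return g.OfflineAudioContext || g.webkitOfflineAudioContext || null
}

// Duration of an offline render in milliseconds: samples / (samples per second)
function snippetDurationMs(length, sampleRate) {
  return (length / sampleRate) * 1000
}

const OfflineContextClass = getOfflineAudioContextClass()
if (OfflineContextClass) {
  const context = new OfflineContextClass(1, 5000, 44100)
  console.log(`Rendering ${context.length} samples`)
}
console.log(snippetDurationMs(5000, 44100).toFixed(1)) // "113.4"
```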

AudioBuffer

An AudioBuffer represents an audio snippet, stored in memory. It’s designed to hold small snippets. The data is represented internally in Linear PCM with each sample represented by a 32-bit float between -1.0 and 1.0. It can hold multiple channels, but for our purposes we’ll use only one channel.
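Because `getChannelData()` returns a `Float32Array`, every stored sample is rounded to single precision. This browser-independent sketch uses a plain `Float32Array` (no Web Audio API needed) to show what that rounding looks like:

```javascript
// AudioBuffer channel data is exposed as a Float32Array, so every sample is
// rounded to the nearest 32-bit float when it is stored
const channel = new Float32Array(3)
channel[0] = 0.1
channel[1] = -0.5
channel[2] = 1 / 3

console.log(channel[0]) // 0.10000000149011612
console.log(channel[1]) // -0.5 (exactly representable)
console.log(channel[0] === Math.fround(0.1)) // true
```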

Oscillator

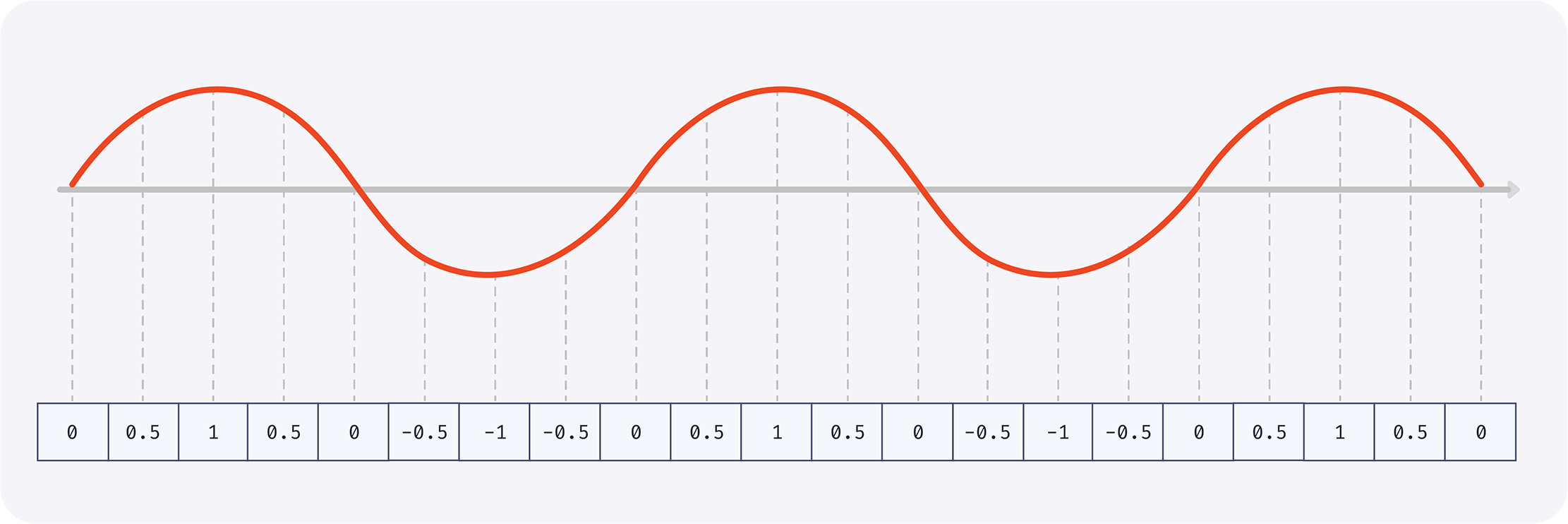

When working with audio, we always need a source. An oscillator is a good candidate, because it generates samples mathematically, as opposed to playing an audio file. In its simplest form, an oscillator generates a periodic waveform with a specified frequency.

The default shape is a sine wave.

It’s also possible to generate other types of waves, such as square, sawtooth, and triangle.

The default frequency is 440 Hz, which is a standard A4 note.
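For intuition, here is a naive triangle wave generator that computes each sample directly from the wave’s phase. Treat it as an illustration only. Browsers generally synthesize band-limited waveforms rather than using this formula, so their samples differ slightly from these ideal values. The function name `naiveTriangle` is hypothetical.

```javascript
// Naive (non-band-limited) triangle wave: rises from 0 to +1, falls to -1, returns to 0
function naiveTriangle(frequency, sampleRate, length) {
  const samples = new Float32Array(length)
  for (let n = 0; n < length; n++) {
    // Position within the current period, from 0 (inclusive) to 1 (exclusive)
    const phase = ((frequency * n) / sampleRate) % 1
    if (phase < 0.25) {
      samples[n] = 4 * phase // rising from 0 to +1
    } else if (phase < 0.75) {
      samples[n] = 2 - 4 * phase // falling from +1 to -1
    } else {
      samples[n] = 4 * phase - 4 // rising from -1 back to 0
    }
  }
  return samples
}

const wave = naiveTriangle(1000, 44100, 5)
console.log(Array.from(wave, (x) => x.toFixed(4)).join(", "))
// 0.0000, 0.0907, 0.1814, 0.2721, 0.3628
```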

Compressor

The Web Audio API provides a DynamicsCompressorNode, which lowers the volume of the loudest parts of the signal and helps prevent distortion or clipping.

DynamicsCompressorNode has many interesting properties that we’ll use. These properties will help create more variability between browsers.

- Threshold: the value in decibels above which the compressor starts taking effect.

- Knee: the range in decibels above the threshold where the curve smoothly transitions to the compressed portion.

- Ratio: the amount of input change, in dB, needed for a 1 dB change in the output.

- Reduction: a read-only float, in decibels, representing the amount of gain reduction currently applied by the compressor to the signal. It is meant for metering, so we read it rather than set it.

- Attack: the amount of time, in seconds, required to reduce the gain by 10 dB. This value can be fractional.

- Release: the amount of time, in seconds, required to increase the gain by 10 dB.
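To see how threshold and ratio interact, here is a simplified static curve with a hard knee. It ignores knee, attack, and release entirely, so it only illustrates the ratio definition above. It is not the curve browsers actually implement. The helper name is hypothetical.

```javascript
// Simplified hard-knee compressor curve (ignores knee, attack and release)
// Above the threshold, every `ratio` dB of input change yields 1 dB of output change
function compressedLevelDb(inputDb, thresholdDb, ratio) {
  if (inputDb <= thresholdDb) {
    return inputDb // below the threshold the signal passes through unchanged
  }
  return thresholdDb + (inputDb - thresholdDb) / ratio
}

// With the settings used later in this article: threshold -50 dB, ratio 12
console.log(compressedLevelDb(-60, -50, 12)) // -60
console.log(compressedLevelDb(-26, -50, 12)) // -48
console.log(compressedLevelDb(-2, -50, 12)) // -46
```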

How the audio fingerprint is calculated

Now that we have all the concepts we need, we can start working on our audio fingerprinting code.

Safari doesn’t support the unprefixed OfflineAudioContext, but it does support webkitOfflineAudioContext, so we’ll use this approach to make the code work in both Chrome and Safari:

```javascript
const AudioContext =
  window.OfflineAudioContext ||
  window.webkitOfflineAudioContext
```

Now we create an AudioContext instance. We’ll use one channel, a 44,100 Hz sample rate and 5,000 samples total, which makes it about 113 ms long (5,000 / 44,100 ≈ 0.113 s).

```javascript
const context = new AudioContext(1, 5000, 44100)
```

Next, let’s create a sound source: an oscillator instance. It will generate a triangle wave that oscillates 1,000 times per second (1,000 Hz).

```javascript
const oscillator = context.createOscillator()
oscillator.type = "triangle"
oscillator.frequency.value = 1000
```

Now let’s create a compressor to add more variety and transform the original signal. Note that the values for all these parameters are arbitrary and are only meant to change the source signal in interesting ways. We could use other values and it would still work.

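```javascript
const compressor = context.createDynamicsCompressor()
compressor.threshold.value = -50
compressor.knee.value = 40
compressor.ratio.value = 12
// compressor.reduction is a read-only meter in the current Web Audio API,
// so assigning to it has no effect (and throws in strict mode); we don't set it
compressor.attack.value = 0
compressor.release.value = 0.2
```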

Let’s connect our nodes together: oscillator to compressor, and compressor to the context destination.

```javascript
oscillator.connect(compressor)
compressor.connect(context.destination)
```

It is time to generate the audio snippet. We’ll use the oncomplete event to get the result when it’s ready.

```javascript
oscillator.start()

context.oncomplete = event => {
  // We have only one channel, so we get it by index
  const samples = event.renderedBuffer.getChannelData(0)
  // `samples` only exists inside this handler, so process it here
}

context.startRendering()
```

`samples` is a Float32Array of floating-point values that represents the raw (uncompressed PCM) sound. Now we need to calculate a single value from that array.

Let’s do it by simply summing up the absolute values of the samples:

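```javascript
function calculateHash(samples) {
  let hash = 0
  for (let i = 0; i < samples.length; ++i) {
    hash += Math.abs(samples[i])
  }
  return hash
}

// Call this inside the context.oncomplete handler, where `samples` is defined
console.log(calculateHash(samples))
```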
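You can exercise the hashing step without a browser by feeding it a synthetic `Float32Array`. This sketch repeats `calculateHash` so it runs on its own, and the sample values are made up. It shows that summing absolute values reacts to even tiny per-sample differences, like the ones between browser engines.

```javascript
function calculateHash(samples) {
  let hash = 0
  for (let i = 0; i < samples.length; ++i) {
    hash += Math.abs(samples[i])
  }
  return hash
}

// Made-up samples; these three values are exactly representable as 32-bit floats
const reference = new Float32Array([0.25, -0.5, 0.75])
// The same signal with one sample nudged by a millionth
const nudged = Float32Array.from(reference, (x, i) => (i === 1 ? x - 1e-6 : x))

console.log(calculateHash(reference)) // 1.5
console.log(calculateHash(nudged) === calculateHash(reference)) // false
```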

Now we are ready to generate the audio fingerprint. When I run it in Chrome on macOS, I get the value:

- 101.45647543197447

That’s all there is to it. Our audio fingerprint is this number!

You can check out a production implementation in our open source browser fingerprinting library.

If I try executing the code in Safari, I get a different number:

- 79.58850509487092

And get another unique result in Firefox:

- 80.95458510611206

Every browser on our testing laptops generates a different value. This value is very stable and remains the same in incognito mode.

This value depends on the underlying hardware and OS, so it may be different in your case.

Why the audio fingerprint varies by browser

Let’s take a closer look at why the values differ between browsers. We’ll examine a single triangular peak of the oscillator signal in both Chrome and Firefox.

First, let’s reduce the duration of our audio snippet to 1/2000th of a second. At 1,000 Hz, this covers half a period of the wave: a single triangular peak that rises from zero and falls back. Then we can examine the values that make up that peak.

We need to change our context length to 23 samples, which at 44,100 Hz is roughly 1/2000th of a second (23 / 44,100 ≈ 0.52 ms). We’ll also skip the compressor for now and only examine the differences in the unmodified oscillator signal.

```javascript
const context = new AudioContext(1, 23, 44100)
```

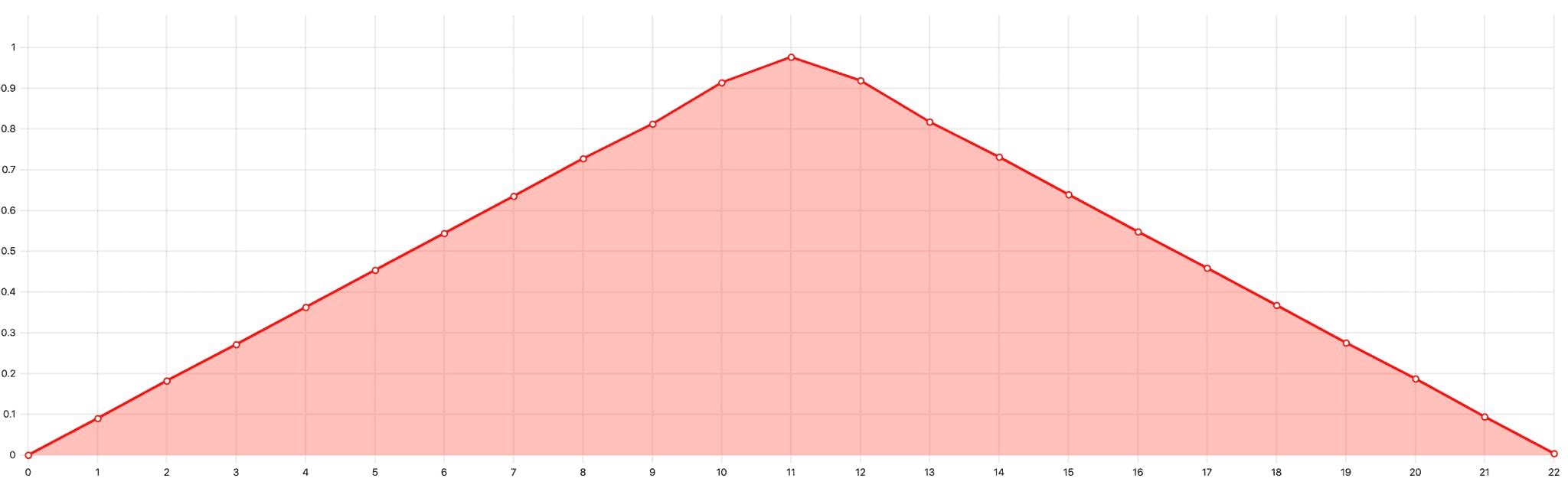

Here is how a single triangular oscillation looks in both Chrome and Firefox now:

However the underlying values are different between the two browsers (I’m showing only the first 3 values for simplicity):

| Chrome | Firefox |
| --- | --- |
| 0.08988945186138153 | 0.09155717492103577 |
| 0.18264609575271606 | 0.18603470921516418 |
| 0.2712443470954895 | 0.2762767672538757 |
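To quantify the gap, you can divide each Firefox sample by the matching Chrome sample. The values below are copied from the table. For these three pairs, the ratio comes out at roughly 1.0186 each time, so in this short excerpt the Firefox values are consistently about 1.9% larger.

```javascript
// First three samples of the unprocessed triangle wave, copied from the table above
const chrome = [0.08988945186138153, 0.18264609575271606, 0.2712443470954895]
const firefox = [0.09155717492103577, 0.18603470921516418, 0.2762767672538757]

const ratios = firefox.map((value, i) => value / chrome[i])
console.log(ratios.map((r) => r.toFixed(4)).join(", ")) // 1.0186, 1.0186, 1.0186
```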

Let’s take a look at this demo to visually see those differences.

Historically, all major browser engines (Blink, WebKit, and Gecko) based their Web Audio API implementations on code that was originally developed by Google in 2011 and 2012 for the WebKit project.

Examples of Google contributions to the WebKit project include the creation of OfflineAudioContext, OscillatorNode, and DynamicsCompressorNode.

Since then, browser developers have made many small changes. These changes, compounded by the large number of mathematical operations involved, lead to differences in the fingerprint. Audio signal processing also uses floating-point arithmetic, which contributes to discrepancies in the calculations.
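Floating-point addition is not associative, so two implementations that perform the same operations in a different order, or with different precision, can produce slightly different results. A minimal JavaScript illustration:

```javascript
// Same three numbers, different grouping, different double-precision result
const leftToRight = (0.1 + 0.2) + 0.3
const rightToLeft = 0.1 + (0.2 + 0.3)
console.log(leftToRight) // 0.6000000000000001
console.log(rightToLeft) // 0.6

// Accumulating in 32-bit vs 64-bit precision also diverges
let sum32 = 0
let sum64 = 0
for (let i = 0; i < 1000; i++) {
  sum32 = Math.fround(sum32 + Math.fround(0.1))
  sum64 += 0.1
}
console.log(sum32 === sum64) // false
```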

You can see how these things are implemented now in the three major browser engines:

- Blink: oscillator, dynamics compressor

- WebKit: oscillator, dynamics compressor

- Gecko: oscillator, dynamics compressor

Additionally, browsers use different implementations for different CPU architectures and OSes to leverage features like SIMD. For example, Chrome uses a separate fast Fourier transform implementation on macOS (producing a different oscillator signal) and different vector operation implementations on different CPU architectures (which are used in the DynamicsCompressor implementation). These platform-specific changes also contribute to differences in the final audio fingerprint.

Fingerprint results also depend on the Android version (for example, they differ between Android 9 and 10 on the same device).

According to browser source code, audio processing doesn’t use dedicated audio hardware or OS features—all calculations are done by the CPU.

Pitfalls

When we started to use audio fingerprinting in production, we aimed to achieve good browser compatibility, stability and performance. For high browser compatibility, we also looked at privacy-focused browsers, such as Tor and Brave.

OfflineAudioContext

As you can see on caniuse.com, OfflineAudioContext works almost everywhere. But there are some cases that need special handling.

The first case is iOS 11 or older. It does support OfflineAudioContext, but the rendering only starts if triggered by a user action, for example by a button click. If context.startRendering is not triggered by a user action, the context.state will be suspended and rendering will hang indefinitely unless you add a timeout. There are not many users who still use this iOS version, so we decided to disable audio fingerprinting for them.
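A minimal sketch of such a timeout, assuming a context object with the `oncomplete` handler and `startRendering()` method of `OfflineAudioContext`. The name `renderWithTimeout` and the 1-second default are illustrative choices, not the library’s `renderAudio` implementation. Because it only touches those two members, it can also be tested with a stub object outside the browser.

```javascript
// Hypothetical helper: resolve with the rendered AudioBuffer, or reject if
// rendering has not completed within `timeoutMs`
function renderWithTimeout(context, timeoutMs = 1000) {
  return new Promise((resolve, reject) => {
    const timer = setTimeout(() => {
      reject(new Error(`Audio rendering did not finish within ${timeoutMs} ms`))
    }, timeoutMs)

    context.oncomplete = (event) => {
      clearTimeout(timer)
      resolve(event.renderedBuffer)
    }

    try {
      const result = context.startRendering()
      // Newer browsers return a promise; report its rejection right away
      if (result && typeof result.catch === "function") {
        result.catch((error) => {
          clearTimeout(timer)
          reject(error)
        })
      }
    } catch (error) {
      // Guard against synchronous exceptions as well
      clearTimeout(timer)
      reject(error)
    }
  })
}

// Usage in the browser:
// renderWithTimeout(context).then((buffer) => console.log(buffer.length))
```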

The second case is browsers on iOS 12 or newer. They can refuse to start audio processing if the page is in the background. Luckily, browsers allow you to resume the processing when the page returns to the foreground. When the page is activated, we call context.startRendering() several times until context.state becomes running. If the processing doesn’t start after several attempts, the code stops. We also use a regular setTimeout on top of our retry strategy in case of an unexpected error or freeze. You can see a code example here.
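The retry loop described above could look roughly like the following. It is a simplified sketch, not the library’s code. It assumes an `OfflineAudioContext`-like object with `state`, `oncomplete`, and `startRendering()`, uses made-up defaults (3 attempts, 500 ms apart), and omits waiting for the page to return to the foreground (for example, via the `visibilitychange` event).

```javascript
// Hypothetical helper: call startRendering() until the context leaves the
// "suspended" state, giving up after `maxAttempts` tries
function startRenderingWithRetries(context, maxAttempts = 3, retryDelayMs = 500) {
  return new Promise((resolve, reject) => {
    let attempts = 0
    context.oncomplete = (event) => resolve(event.renderedBuffer)

    const tryStart = () => {
      attempts += 1
      try {
        const result = context.startRendering()
        // A repeated call may return a rejected promise; the state check below
        // decides whether to retry, so that rejection itself is ignored
        if (result && typeof result.catch === "function") {
          result.catch(() => {})
        }
      } catch (error) {
        reject(error)
        return
      }
      setTimeout(() => {
        if (context.state !== "suspended") {
          return // rendering is running or has already finished
        }
        if (attempts < maxAttempts) {
          tryStart()
        } else {
          reject(new Error(`Audio rendering did not start after ${attempts} attempts`))
        }
      }, retryDelayMs)
    }

    tryStart()
  })
}
```

In practice, you would combine this with an overall timeout, as described above.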

Tor

In the case of the Tor browser, everything is simple: the Web Audio API is disabled there, so audio fingerprinting is not possible.

Brave

With Brave, the situation is more nuanced. Brave is a privacy-focused browser based on Blink. It is known to slightly randomize the audio sample values, which it calls “farbling”.

Farbling is Brave’s term for slightly randomizing the output of semi-identifying browser features, in a way that’s difficult for websites to detect, but doesn’t break benign, user-serving websites. These “farbled” values are deterministically generated using a per-session, per-eTLD+1 seed so that a site will get the exact same value each time it tries to fingerprint within the same session, but that different sites will get different values, and the same site will get different values on the next session. This technique has its roots in prior privacy research, including the PriVaricator (Nikiforakis et al, WWW 2015) and FPRandom (Laperdrix et al, ESSoS 2017) projects.

Brave offers three levels of farbling (users can choose the level they want in settings):

- Disabled — no farbling is applied. The fingerprint is the same as in other Blink browsers such as Chrome.

- Standard — This is the default value. The audio signal values are multiplied by a fixed number, called the “fudge” factor, that is stable for a given domain within a user session. In practice it means that the audio wave sounds and looks the same, but has tiny variations that make it difficult to use in fingerprinting.

- Strict — the sound wave is replaced with a pseudo-random sequence.

The farbling modifies the original Blink AudioBuffer by transforming the original audio values.

Reverting Brave standard farbling

To revert the farbling, we need to obtain the fudge factor first. Then we can get back the original buffer by dividing the farbled values by the fudge factor:

```javascript
async function getFudgeFactor() {
  // AudioContext is the OfflineAudioContext alias defined earlier
  const context = new AudioContext(1, 1, 44100)
  const inputBuffer = context.createBuffer(1, 1, 44100)
  inputBuffer.getChannelData(0)[0] = 1

  const inputNode = context.createBufferSource()
  inputNode.buffer = inputBuffer
  inputNode.connect(context.destination)
  inputNode.start()

  // See the renderAudio implementation
  // at https://git.io/Jmw1j
  const outputBuffer = await renderAudio(context)
  return outputBuffer.getChannelData(0)[0]
}

// Top-level await requires a module script (or wrap this in an async function)
const [fingerprint, fudgeFactor] = await Promise.all([
  // This function is the fingerprint algorithm described
  // in the “How the audio fingerprint is calculated” section
  getFingerprint(),
  getFudgeFactor(),
])

const restoredFingerprint = fingerprint / fudgeFactor
```
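Dividing the final fingerprint works because standard farbling multiplies every sample by the same positive fudge factor f, so the sum of absolute values is scaled by f as well: Σ|f·xᵢ| = f·Σ|xᵢ|. The sketch below simulates this outside the browser. The fudge factor value and the synthetic signal are made-up assumptions that only illustrate the arithmetic. It also shows that 32-bit rounding of each farbled sample keeps the restored value close to the original, but not guaranteed to be equal.

```javascript
function calculateHash(samples) {
  let hash = 0
  for (let i = 0; i < samples.length; ++i) {
    hash += Math.abs(samples[i])
  }
  return hash
}

// Made-up fudge factor; Brave derives the real one from a per-session, per-site seed
const fudgeFactor = 0.99991
// Synthetic stand-in for the rendered samples
const original = Float32Array.from({ length: 5000 }, (_, i) => 0.5 * Math.sin(i / 7))
// Simulated standard farbling: multiply each sample, then store it as a 32-bit float
const farbled = Float32Array.from(original, (x) => x * fudgeFactor)
// getFudgeFactor() reads f back from a Float32Array, so it is rounded too
const measuredFudge = Math.fround(fudgeFactor)

const originalHash = calculateHash(original)
const restoredHash = calculateHash(farbled) / measuredFudge
const relativeError = Math.abs(restoredHash - originalHash) / originalHash
console.log(`relative error: ${(relativeError * 100).toExponential(2)}%`)
```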

Unfortunately, floating-point operations lack the precision required to recover the original samples exactly. The table below shows the restored audio fingerprint in different cases and how close it is to the original value:

| OS, browser | Fingerprint | Difference from the target fingerprint (% of target) |
| --- | --- | --- |
| macOS 11, Chrome 89 (the target fingerprint) | 124.0434806260746 | n/a |
| macOS 11, Brave 1.21 (same device and OS) | Various fingerprints after browser restarts: 124.04347912294482, 124.0434832855703, 124.04347889351203, 124.04348024313667 | 0.00000014% – 0.00000214% |
| Windows 10, Chrome 89 | 124.04347527516074 | 0.00000431% |
| Windows 10, Brave 1.21 | Various fingerprints after browser restarts: 124.04347610535537, 124.04347187270707, 124.04347220244154, 124.04347384813703 | 0.00000364% – 0.00000679% |
| Android 11, Chrome 89 | 124.08075528279005 | 0.03% |
| Android 9, Chrome 89 | 124.08074500028306 | 0.03% |
| ChromeOS 89 | 124.04347721464 | 0.00000275% |
| macOS 11, Safari 14 | 35.10893232002854 | 71.7% |
| macOS 11, Firefox 86 | 35.7383295930922 | 71.2% |

As you can see, the restored Brave fingerprints are closer to the original fingerprints than to other browsers’ fingerprints. This means that you can use a fuzzy algorithm to match them. For example, if the difference between a pair of audio fingerprint numbers is more than 0.0000022%, you can assume that these are different devices or browsers.
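A minimal sketch of that fuzzy comparison, using the relative difference in percent as in the table above. The function name is hypothetical and the default threshold is the 0.0000022% figure from this section, so treat it as a starting point rather than a universal constant.

```javascript
// Hypothetical helper: compare two audio fingerprints by relative difference (in %)
function isProbablySameAudioFingerprint(a, b, thresholdPercent = 0.0000022) {
  const differencePercent = (Math.abs(a - b) / Math.abs(a)) * 100
  return differencePercent <= thresholdPercent
}

const chromeMac = 124.0434806260746 // macOS 11, Chrome 89 (target)
const braveMac = 124.0434832855703 // macOS 11, Brave 1.21, restored
const chromeWindows = 124.04347527516074 // Windows 10, Chrome 89

console.log(isProbablySameAudioFingerprint(chromeMac, braveMac)) // true
console.log(isProbablySameAudioFingerprint(chromeMac, chromeWindows)) // false
```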

Performance

Web Audio API rendering

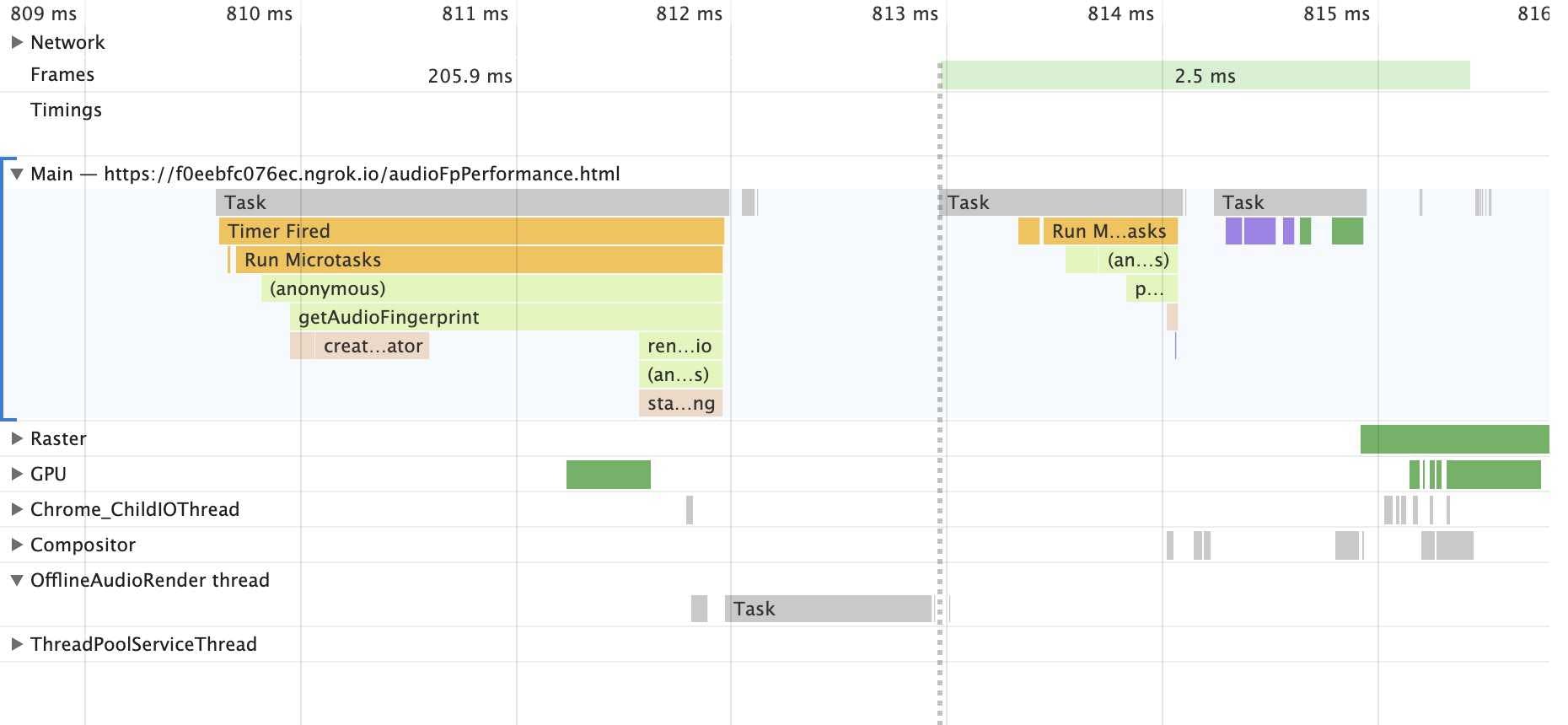

Let’s take a look at what happens under the hood in Chrome during audio fingerprint generation. In the screenshot below, the horizontal axis is time, the rows are execution threads, and the bars are time slices when the browser is busy. You can learn more about the performance panel in this Chrome article. The audio processing starts at 809.6 ms and completes at 814.1 ms:

The main thread, labeled “Main” in the image, handles user input (mouse movements, clicks, taps, etc.) and animation. When the main thread is busy, the page freezes. It’s a good practice to avoid running blocking operations on the main thread for more than several milliseconds.

As you can see on the image above, the browser delegates some work to the OfflineAudioRender thread, freeing the main thread. Therefore the page stays responsive during most of the audio fingerprint calculation.

The Web Audio API is not available in web workers, so we cannot calculate audio fingerprints there.

Performance summary in different browsers

The table below shows the time it takes to get a fingerprint on different browsers and devices. The time is measured immediately after a cold page load.

| Device, OS, browser | Time to fingerprint |
| --- | --- |
| MacBook Pro 2015 (Core i7), macOS 11, Safari 14 | 5 ms |
| MacBook Pro 2015 (Core i7), macOS 11, Chrome 89 | 7 ms |
| Acer Chromebook 314, Chrome OS 89 | 7 ms |
| Pixel 5, Android 11, Chrome 89 | 7 ms |
| iPhone SE1, iOS 13, Safari 13 | 12 ms |
| Pixel 1, Android 7.1, Chrome 88 | 17 ms |
| Galaxy S4, Android 4.4, Chrome 80 | 40 ms |
| MacBook Pro 2015 (Core i7), macOS 11, Firefox 86 | 50 ms |
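To take measurements like these yourself, you can time the whole asynchronous call with `performance.now()`. A minimal sketch, assuming a `getFingerprint()` function that returns a promise (as used in the Brave section). `timeAsync` is a hypothetical helper name.

```javascript
// Hypothetical helper: run an async function and report how long it took
async function timeAsync(fn) {
  const start = performance.now()
  const result = await fn()
  return { result, durationMs: performance.now() - start }
}

// Usage in the browser, assuming getFingerprint() from earlier in the article:
// timeAsync(getFingerprint).then(({ result, durationMs }) => {
//   console.log(`Fingerprint ${result} took ${durationMs.toFixed(1)} ms`)
// })
```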

Audio fingerprinting is only a small part of the larger identification process.

Audio fingerprinting is one of the many signals our open source library uses to generate a browser fingerprint. However, we do not blindly incorporate every signal available in the browser. Instead we analyze the stability and uniqueness of each signal separately to determine their impact on fingerprint accuracy.

For audio fingerprinting, we found that the signal contributes only slightly to uniqueness but is highly stable, resulting in a small net increase to fingerprint accuracy.

You can learn more about stability, uniqueness and accuracy in our beginner’s guide to browser fingerprinting.

Try Browser Fingerprinting for Yourself

Browser fingerprinting is a useful method of visitor identification for a variety of anti-fraud applications. It is particularly useful to identify malicious visitors attempting to circumvent tracking by clearing cookies, browsing in incognito mode or using a VPN.

You can try implementing browser fingerprinting yourself with our open source library. FingerprintJS is the most popular browser fingerprinting library available, with over 12K GitHub stars.

For higher identification accuracy, we also developed the FingerprintJS Pro API, which uses machine learning to combine browser fingerprinting with additional identification techniques. You can sign up for FingerprintJS Pro for free with up to 1,000 monthly unique visitors.

Get in touch

- Star, follow or fork our GitHub project

- Email us your questions at oss@fingerprintJS.com

- Sign up to our newsletter for updates

- Join our team to work on exciting research in online security: work@fingerprintjs.com